Master Crawl Budget Optimization for Enterprise SEO Success

Introduction to Crawl Efficiency

In the realm of enterprise-level SEO, content quality is king, but crawlability is the kingdom's infrastructure. If search engines cannot efficiently find and crawl your pages, even the most brilliant content remains invisible. Optimizing crawl budget is not merely a housekeeping task; it is a critical lever for growth, especially for e-commerce platforms and large publishers.

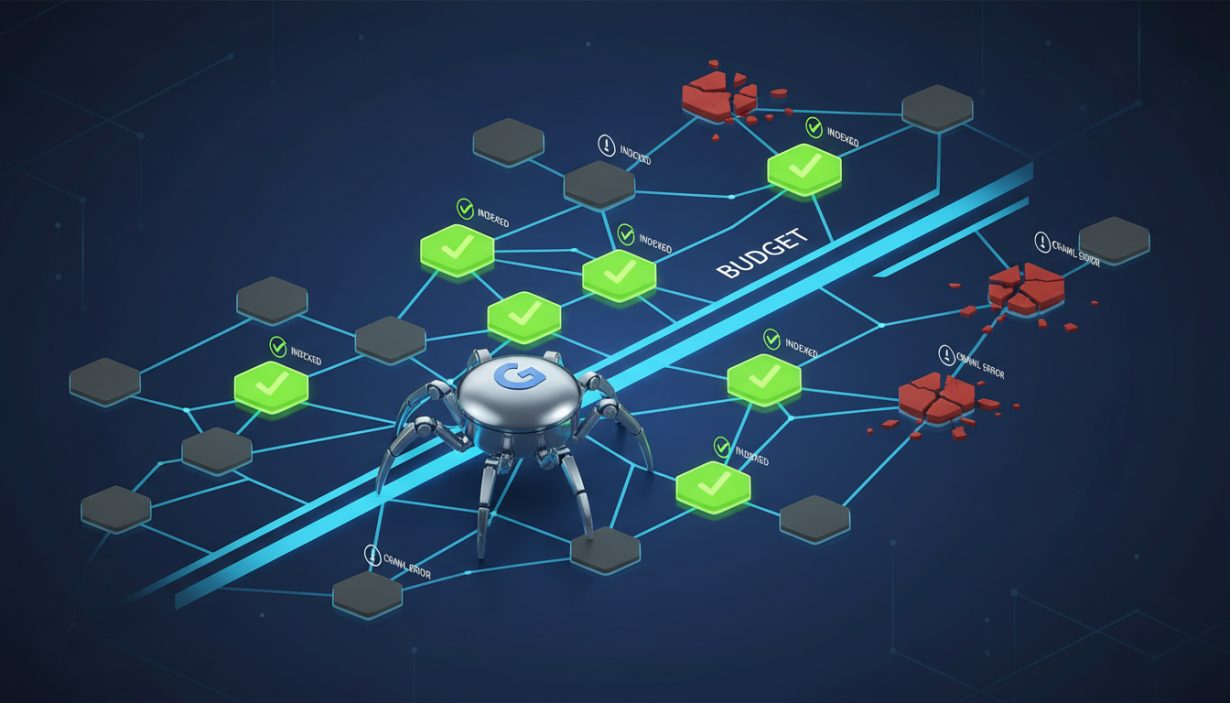

Crawl budget refers to the number of URLs a search engine bot (like Googlebot) can and wants to crawl on a website within a given timeframe. Indexing is a separate step that can only happen after a page has been crawled. For sites with thousands or millions of URLs, wasted crawl budget means delayed or missed indexing and stale content in SERPs. This guide dives deep into technical strategies to ensure Google spends its time on your money pages, not your trash.

For foundational knowledge on site architecture, read our guide on technical SEO fundamentals.

The Mechanics: Crawl Rate Limit vs. Crawl Demand

To master optimization, you must understand the two pillars that define crawl budget. Google does not simply crawl as much as it can; it balances server health against the value of the content.

1. Crawl Rate Limit

This acts as a brake. It represents the maximum number of simultaneous connections Googlebot can make to your site without degrading the user experience or crashing your server. Factors include:

- Server Health: Frequent 5xx response codes and timeouts signal an overloaded server and cause Googlebot to slow down.

- Crawl Health: Consistently fast response times (Time to First Byte) allow Googlebot to crawl more.

2. Crawl Demand

This acts as the accelerator. It represents how much Google wants to crawl your site based on popularity and freshness. Factors include:

- Popularity: High-authority URLs (linked internally and externally) are prioritized.

- Staleness: Google attempts to keep its index fresh by re-crawling updated content.

Here is a breakdown of factors affecting your budget:

| Factor | Impact Level | Optimization Strategy |
|---|---|---|
| Server Response Time (TTFB) | Critical | Optimize databases, use CDNs, and ensure TTFB < 200 ms. |
| Low-Quality Content | High | Prune 'thin' pages or consolidate via canonicals. |
| Redirect Chains | High | Fix chains to point directly to the final destination (A -> C, not A -> B -> C). |
| Faceted Navigation | Medium | Use robots.txt or noindex on low-value filter combinations. |
| Sitemap Accuracy | Medium | Ensure XML sitemaps only contain 200 OK, indexable, canonical URLs. |
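The redirect-chain fix in the table (A -> C, not A -> B -> C) can be sketched in a few lines of Python. This is a minimal illustration, assuming you have already exported your redirects as a simple source-to-target mapping; the `flatten_redirects` helper and the sample paths are hypothetical, not part of any particular tool.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every redirecting URL straight to its final destination."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow hops until we reach a URL that no longer redirects.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


# Hypothetical export of existing redirect rules.
redirect_map = {"/a": "/b", "/b": "/c", "/old": "/a"}
flat = flatten_redirects(redirect_map)
print(flat)
```

In this sample every source ends up pointing directly at /c, so Googlebot needs one hop instead of two or three. The loop check is there because real redirect exports occasionally contain cycles.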

Identifying Crawl Waste Through Log Analysis

You cannot manage what you do not measure. Log file analysis is the only definitive way to see exactly what Googlebot is doing. By analyzing your server access logs, you can identify patterns where budget is being squandered.
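As a starting point, the sketch below counts Googlebot requests by status code and URL. It assumes your server writes the common "combined" access log format; the regular expression, the `summarize_googlebot` helper, and the sample lines are illustrative assumptions, so adjust them to your own log layout.

```python
import re
from collections import Counter

# Assumed layout: Apache/Nginx "combined" log format.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_googlebot(lines):
    """Count Googlebot hits per status code and per URL."""
    status_counts = Counter()
    url_counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        # Note: the user agent can be spoofed; a production audit should
        # also verify the requesting IP (for example via reverse DNS).
        if not match or "Googlebot" not in match["agent"]:
            continue
        status_counts[match["status"]] += 1
        url_counts[match["url"]] += 1
    return status_counts, url_counts


# Hypothetical sample lines for illustration only.
sample_log = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /shoes?sort=price HTTP/1.1" 200 2326 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2023:13:55:40 +0000] "GET /old-page HTTP/1.1" 301 0 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:13:56:01 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
statuses, urls = summarize_googlebot(sample_log)
print(statuses.most_common())
print(urls.most_common(10))
```

A high share of 3xx, 4xx, or 5xx responses in the status counts, or parameterized URLs dominating the URL counts, is usually the first visible sign of wasted budget.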

Common sources of crawl waste include:

- Faceted Navigation & Parameters: E-commerce sites often generate near-infinite URLs through filters (e.g., ?color=red&size=medium&sort=price). If these are not controlled via robots.txt, Googlebot may get caught in spider traps. Note that Google retired the Search Console URL Parameters tool in 2022, so it is no longer an option.

- Soft 404s: Pages that return a 200 OK status but contain no content confuse bots and waste resources.

- Hacked Pages: Infinite spam injection pages can drain your budget overnight.

- Legacy Redirects: Old 301s that are no longer relevant but still being crawled due to internal links.
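To see how much of the crawl goes to faceted URLs, one option is to group crawled URLs by the set of query parameter names they carry. The sketch below is a rough illustration; `parameter_signature`, `crawl_hits_by_signature`, and the sample URLs are hypothetical names and data, and the input would normally be the Googlebot URLs extracted from your logs.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit


def parameter_signature(url):
    """Return the sorted parameter names of a URL, ignoring their values."""
    query = urlsplit(url).query
    names = sorted({name for name, _ in parse_qsl(query, keep_blank_values=True)})
    return "&".join(names) if names else "(no parameters)"


def crawl_hits_by_signature(urls):
    """Count crawled URLs per parameter combination."""
    return Counter(parameter_signature(u) for u in urls)


# Hypothetical list of URLs requested by Googlebot.
crawled = [
    "/shoes",
    "/shoes?color=red&size=medium&sort=price",
    "/shoes?sort=price&color=blue&size=small",
    "/shoes?sort=price",
]
hits = crawl_hits_by_signature(crawled)
for signature, count in hits.most_common():
    print(count, signature)
```

Combinations that attract many hits but correspond to no search demand are the natural candidates for a robots.txt rule.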

Learn more about conducting a log file audit to visualize these bottlenecks.

Advanced Strategies for Optimizing Crawl Budget

Once you have diagnosed the issues, it is time to implement fixes. The goal is to steer Googlebot toward high-value, indexable URLs.

Pruning and Consolidation

Large sites often suffer from index bloat. Identify pages with zero traffic and zero backlinks. You have two choices:

- Improve: If the topic is valuable, update the content.

- Remove: If the content is obsolete, return a 410 (Gone) or 404 status. If it has moved, use a 301 redirect.

Managing Facets and Parameters

For e-commerce, this is non-negotiable. Use robots.txt to disallow crawling of sorting parameters (e.g., Disallow: /*?sort=, plus Disallow: /*&sort= to catch URLs where sort is not the first parameter) while keeping category facets that have search volume (e.g., specific brand filters) crawlable and indexable.
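Before deploying a wildcard rule, it helps to check which URLs it would actually block. The sketch below is a simplified matcher for Disallow patterns using the `*` wildcard and a trailing `$` anchor; it deliberately ignores Allow rules and rule precedence, and the function names and sample paths are assumptions for illustration.

```python
import re


def robots_pattern_to_regex(pattern):
    """Translate a robots.txt path pattern ('*' wildcard, trailing '$')."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal parts and let each '*' match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_blocked(url_path, disallow_patterns):
    """True if any Disallow pattern matches from the start of the path."""
    return any(robots_pattern_to_regex(p).match(url_path) for p in disallow_patterns)


# Hypothetical paths to test against a sort-parameter rule.
for path in ["/shoes?sort=price", "/shoes?color=red&sort=price", "/shoes?brand=acme"]:
    print(path, is_blocked(path, ["/*?sort="]))
```

Running this shows that a pattern written as /*?sort= only matches URLs where sort is the first parameter; a URL such as /shoes?color=red&sort=price slips through unless a second pattern like /*&sort= is added.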

Internal Linking Structure

A flat site architecture ensures that pages are within 3-4 clicks of the homepage. This increases the PageRank passed to deeper pages, signaling to Google that these pages are important and increasing their crawl demand. Conversely, orphan pages (pages with no internal links) rarely get crawled.
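Click depth and orphan candidates can be estimated with a breadth-first search over your internal link graph. The sketch below assumes you already have a crawl export mapping each page to the pages it links to, plus a list of all known URLs (for example from your sitemap); `click_depths`, `find_orphans`, and the sample data are hypothetical.

```python
from collections import deque


def click_depths(links, homepage):
    """Breadth-first search: minimum number of clicks from the homepage."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def find_orphans(all_pages, depths):
    """Known pages that cannot be reached by following internal links."""
    return sorted(set(all_pages) - set(depths))


# Hypothetical internal link graph and list of known URLs.
site_links = {
    "/": ["/shoes", "/bags"],
    "/shoes": ["/shoes/running"],
    "/shoes/running": ["/shoes/running/trail-x"],
}
known_pages = ["/", "/shoes", "/bags", "/shoes/running",
               "/shoes/running/trail-x", "/clearance-2019"]

depths = click_depths(site_links, "/")
orphans = find_orphans(known_pages, depths)
deep_pages = [page for page, depth in depths.items() if depth > 4]
print(depths)
print(orphans)
```

Pages that land in `deep_pages` are candidates for additional internal links from hubs or category pages, while anything in `orphans` needs at least one internal link before you can expect it to be crawled regularly.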