What Robots.txt Is & Why It Matters for SEO

What Is a Robots.txt File?

A robots.txt file is a set of instructions that tells search engine crawlers which pages to crawl and which to avoid. It guides crawler access, but it does not necessarily keep pages out of Google’s index.

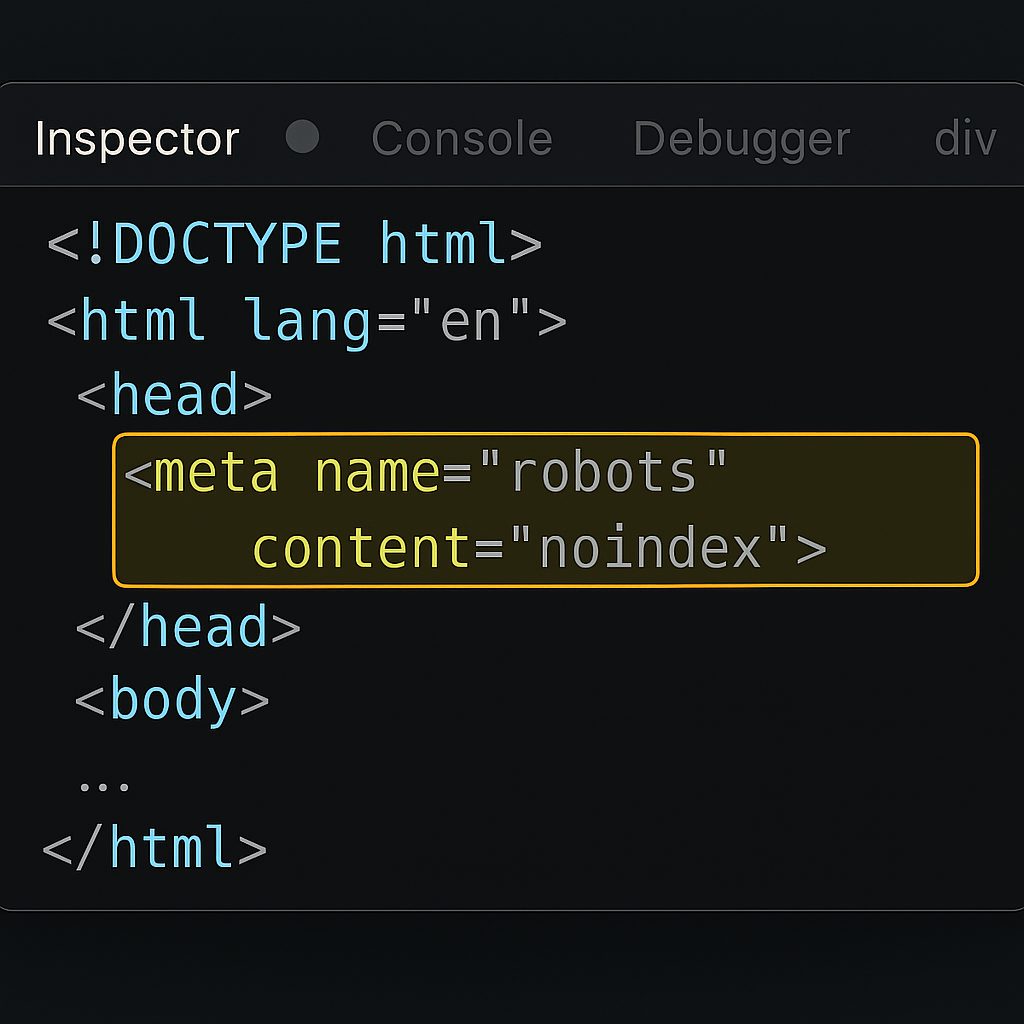

Robots.txt files, meta robots tags, and X-Robots-Tag headers all guide how search engines handle site content, but they differ in their level of control, where they're located, and what they control.

- Robots.txt: Site-wide instructions located in the root directory

- Meta robots tags: Page-specific instructions in the <head>

- X-Robots-Tag: HTTP header instructions for non-HTML files

Further reading: Meta Robots Tag & X-Robots-Tag Explained

Why Is Robots.txt Important for SEO?

Robots.txt helps manage crawler activity and keeps bots focused on valuable content.

1. Optimize Crawl Budget

Prevent wasteful crawling of unimportant pages, especially on large sites.

2. Block Duplicate and Non-Public Pages

Keep crawlers out of staging sites, login pages, and internal search results.

3. Hide Resources

Exclude PDFs, media files, or sensitive directories.

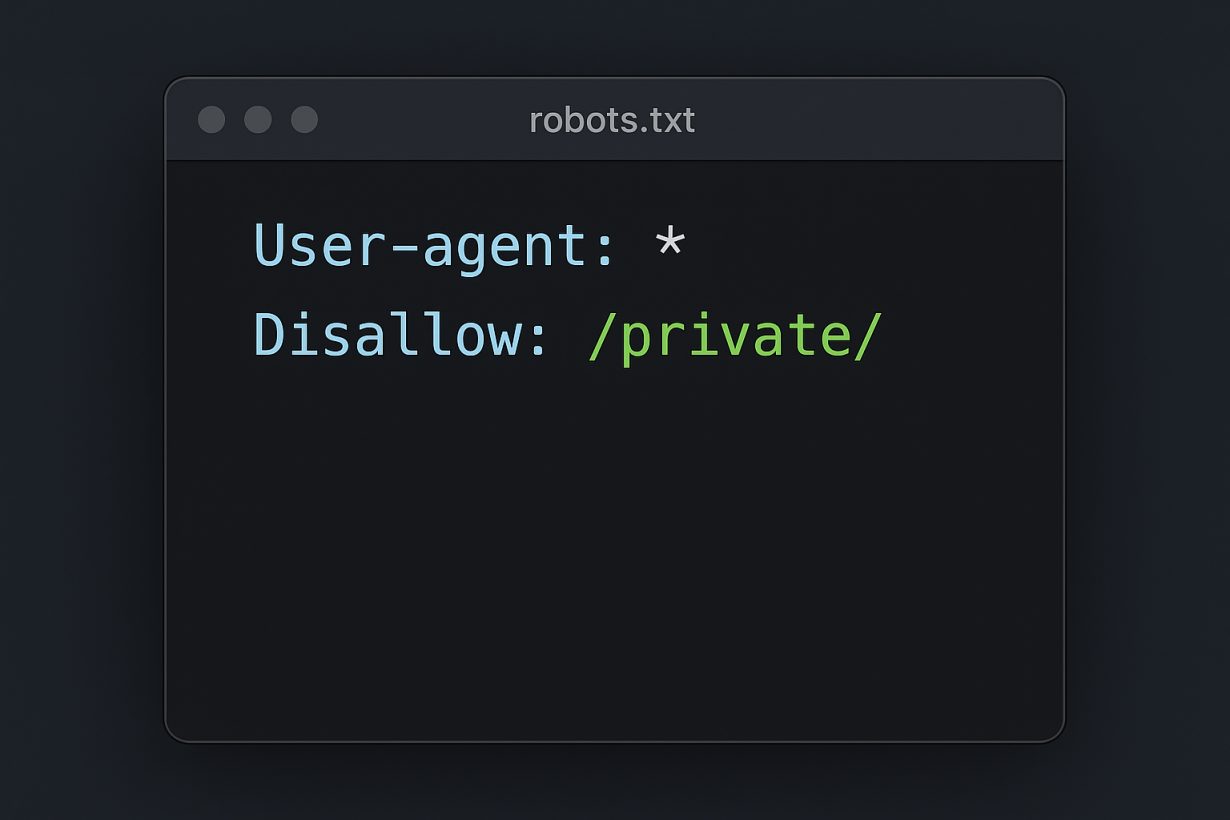

How Does a Robots.txt File Work?

Bots look for /robots.txt before crawling.

User-agent: *

Disallow: /private

You can target specific bots by name, or all bots at once with the * wildcard.
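To see how a crawler might interpret rules like these, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The domain, the `/drafts` path, and the bot name `ExampleBot` are made up for illustration, and real search engine parsers may differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for all bots, one group just for Googlebot.
rules = """\
User-agent: *
Disallow: /private

User-agent: Googlebot
Disallow: /drafts
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# A bot without its own group falls back to the "*" group.
print(parser.can_fetch("ExampleBot", "https://www.example.com/private/page"))  # False
print(parser.can_fetch("ExampleBot", "https://www.example.com/drafts/post"))   # True

# A bot with its own group follows only that group.
print(parser.can_fetch("Googlebot", "https://www.example.com/drafts/post"))    # False
print(parser.can_fetch("Googlebot", "https://www.example.com/private/page"))   # True
```

Note the last line: because Googlebot has a dedicated group here, the rules in the `*` group no longer apply to it.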

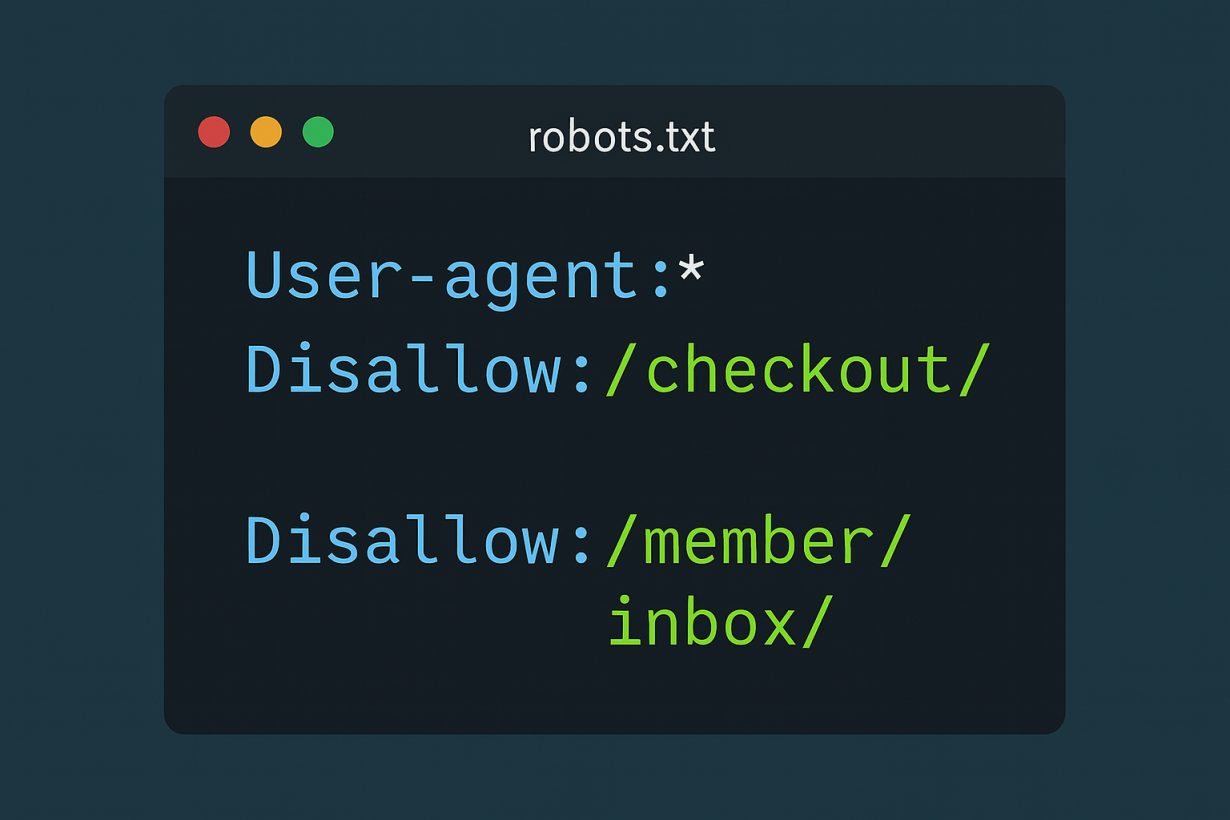

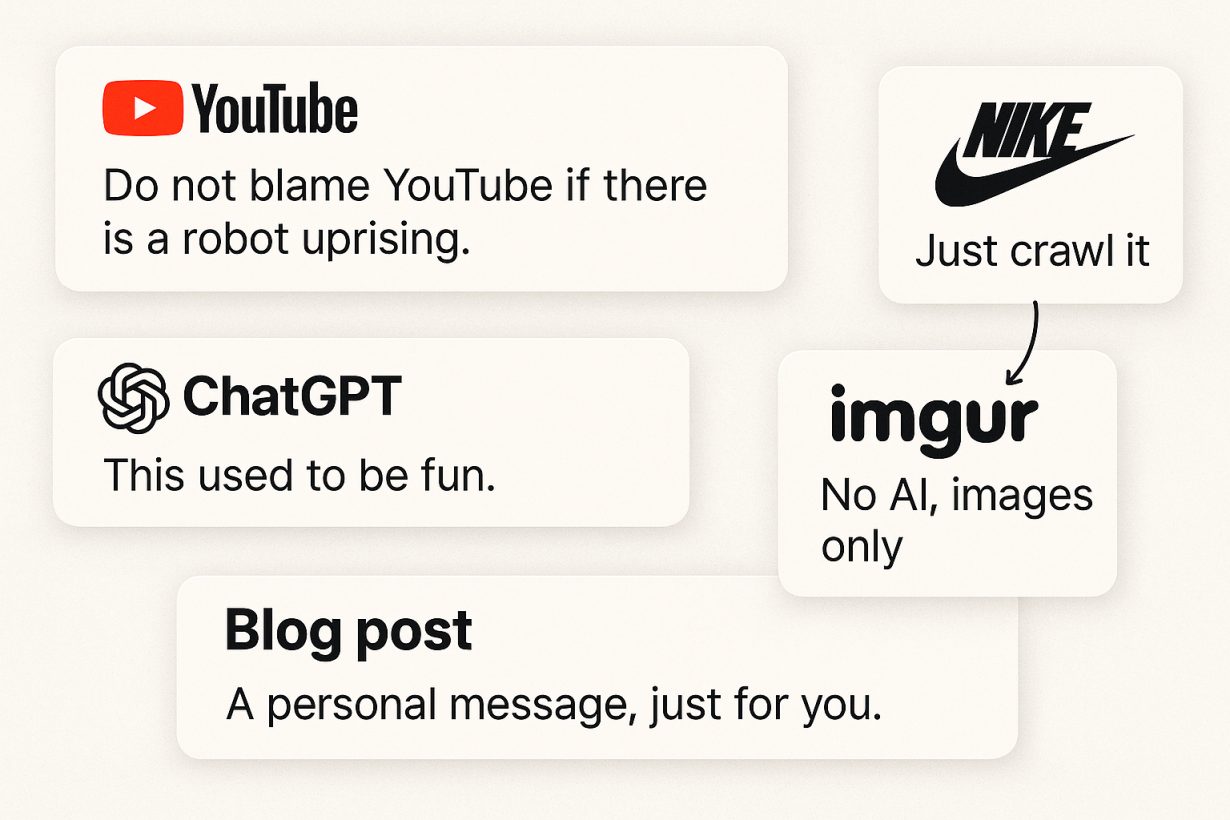

Real-World Examples

YouTube

Blocks user content, feeds, and login pages.

Nike

Blocks checkout and member inbox directories.

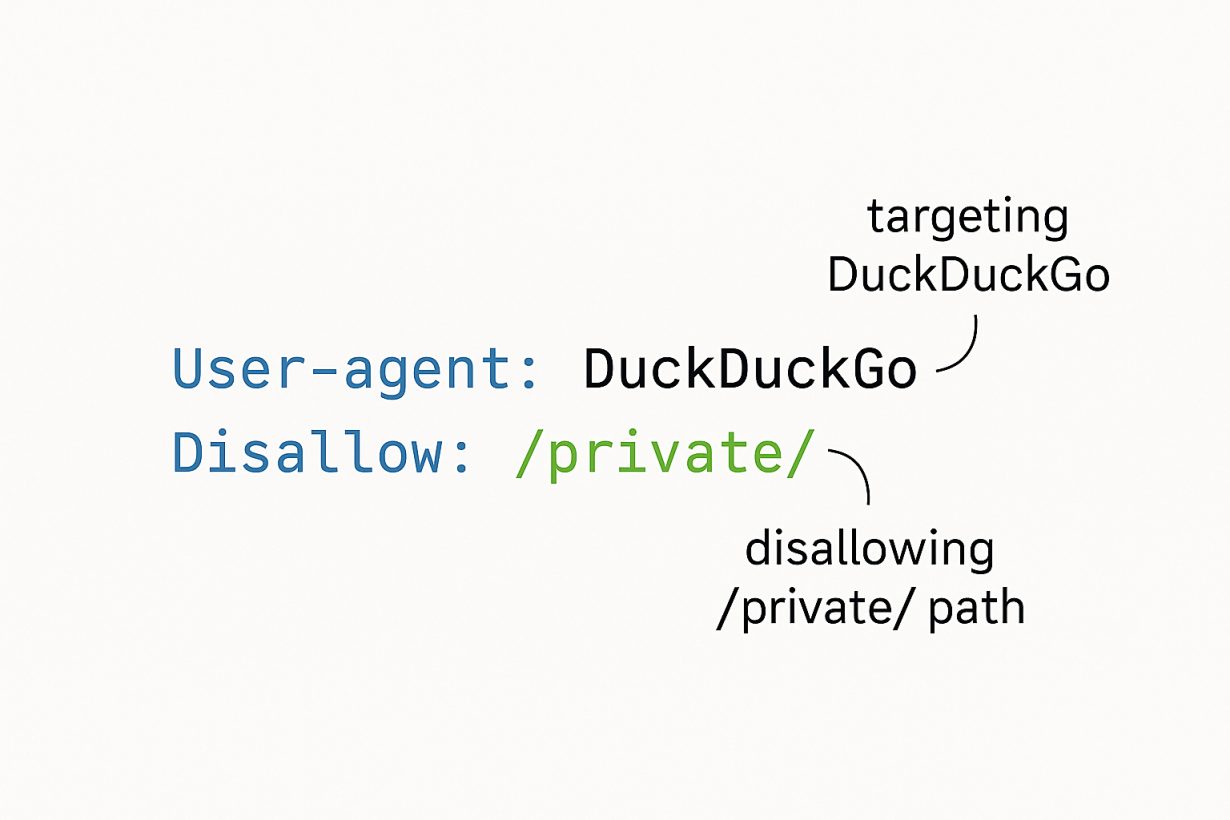

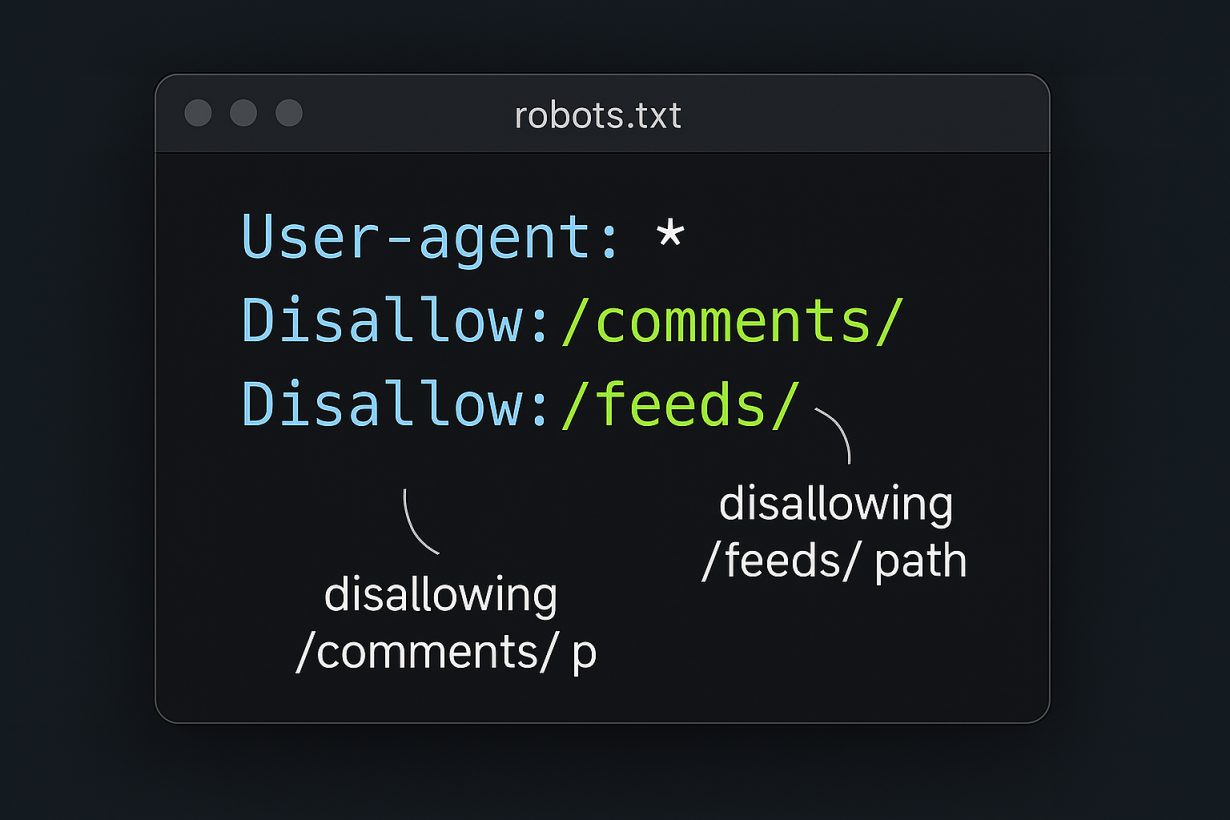

Understanding Robots.txt Syntax

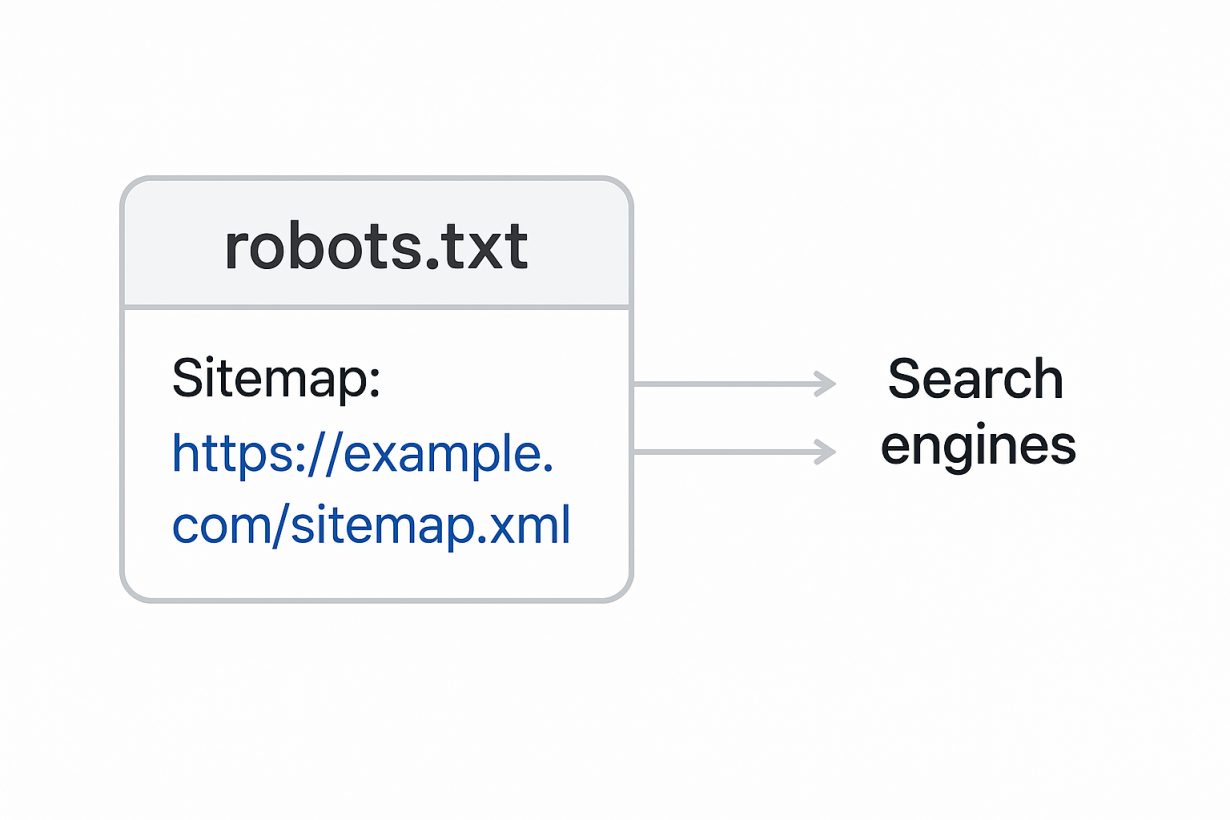

Each block starts with a user-agent and defines rules like Allow or Disallow.

User-agent: *

Disallow: /admin

Allow: /admin/login.html

You can also add a Sitemap or Crawl-delay directive.
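As a rough illustration of how these directives fit together, the sketch below parses a sample file with Python's standard-library `urllib.robotparser` (`site_maps()` needs Python 3.8 or later). The domain, the delay value, and the bot name `ExampleBot` are assumptions. The Allow line is listed before the Disallow line because Python's parser has historically applied the first matching rule, whereas Google documents that it picks the most specific (longest) matching rule regardless of order.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file combining Allow, Disallow, Crawl-delay, and Sitemap.
rules = """\
User-agent: *
Allow: /admin/login.html
Disallow: /admin
Crawl-delay: 10
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# The Allow rule carves an exception out of the blocked /admin section.
print(parser.can_fetch("ExampleBot", "https://www.example.com/admin/login.html"))  # True
print(parser.can_fetch("ExampleBot", "https://www.example.com/admin/settings"))    # False

# Crawl-delay and Sitemap are exposed through their own accessors.
print(parser.crawl_delay("ExampleBot"))  # 10
print(parser.site_maps())                # ['https://www.example.com/sitemap.xml']
```

Support for Crawl-delay varies by crawler, so treat the value as a request rather than a guarantee.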

Common Mistakes to Avoid

- Placing robots.txt outside root

- Using noindex in robots.txt (unsupported by Google)

- Blocking important resources (JS/CSS)

- Using absolute URLs instead of relative paths in Allow and Disallow rules (the Sitemap directive is the exception and takes a full URL)
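Some of the mistakes above can be caught automatically. The following is a small, non-exhaustive sketch of a checker; the function name `lint_robots_txt` and the sample file are hypothetical, and it does not verify where the file is hosted.

```python
def lint_robots_txt(text):
    """Return warnings for a few common robots.txt mistakes (not exhaustive)."""
    warnings = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field == "noindex":
            warnings.append(f"line {number}: noindex in robots.txt is not supported by Google")
        elif field in ("allow", "disallow"):
            if value.lower().startswith(("http://", "https://")):
                warnings.append(f"line {number}: rule uses an absolute URL; use a path such as /private")
            elif field == "disallow" and value.lower().endswith((".js", ".css")):
                warnings.append(f"line {number}: rule blocks a JS/CSS resource")
    return warnings


# Hypothetical file containing three of the mistakes listed above.
sample = """\
User-agent: *
Noindex: /old-page
Disallow: https://www.example.com/private
Disallow: /assets/app.js
Allow: /blog
"""

for warning in lint_robots_txt(sample):
    print(warning)
```

Run against the sample, this reports one warning each for lines 2, 3, and 4.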

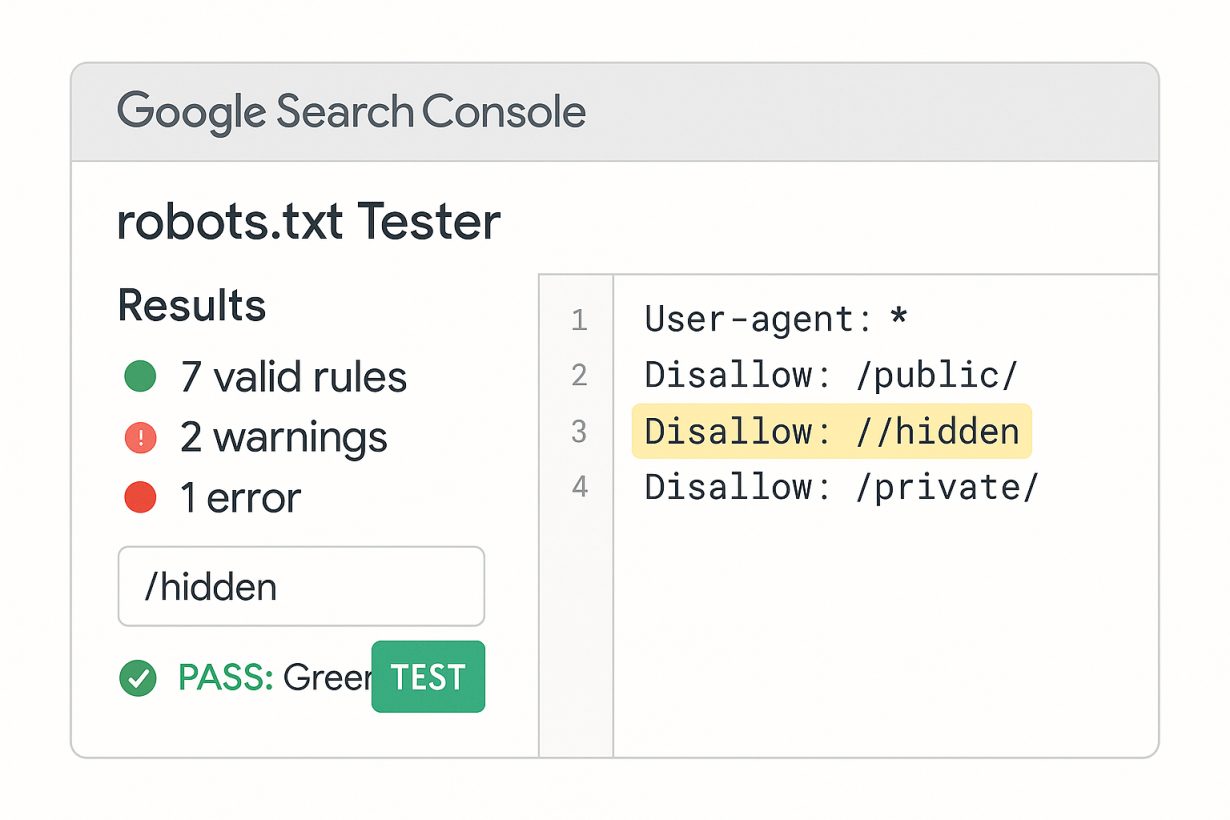

How to Create and Test Robots.txt

1. Create the File

Use a text editor and save as "robots.txt".

2. Add Directives

Structure using groups per user-agent.

3. Upload

Upload to root directory of your site.
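Crawlers only look for the file at the root of each host, so a quick way to confirm the expected location is to derive it from any page URL. A minimal sketch, with a hypothetical helper name and example URLs:

```python
from urllib.parse import urljoin


def robots_txt_url(page_url):
    """Return the root-level robots.txt URL for the host that serves page_url."""
    return urljoin(page_url, "/robots.txt")


print(robots_txt_url("https://www.example.com/blog/post?page=2"))
# https://www.example.com/robots.txt

# Each subdomain is a separate host and needs its own file.
print(robots_txt_url("https://shop.example.com/cart"))
# https://shop.example.com/robots.txt
```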

4. Test with Tools

Validate the file with Google Search Console, Semrush Site Audit, or an open-source robots.txt parsing library before relying on it.
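For a quick local check before uploading, one option is Python's standard-library `urllib.robotparser`. The sketch below compares a draft file against a list of expectations; the helper name `check_expectations`, the draft rules, and the URLs are all illustrative. Results may differ from Google's own parser, particularly for wildcard rules, so treat this as a first pass rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser


def check_expectations(robots_txt, expectations, user_agent="*"):
    """Return the URLs whose crawlability differs from what was expected."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url
        for url, should_be_allowed in expectations.items()
        if parser.can_fetch(user_agent, url) != should_be_allowed
    ]


# Hypothetical draft file and the behavior we expect from it.
draft = """\
User-agent: *
Disallow: /checkout
Disallow: /search
"""

expectations = {
    "https://www.example.com/": True,
    "https://www.example.com/blog/robots-guide": True,
    "https://www.example.com/checkout/cart": False,
    "https://www.example.com/search?q=shoes": False,
}

failures = check_expectations(draft, expectations)
print(failures or "all expectations met")
```

Keeping the expectations in version control makes it easy to rerun the check whenever the file changes.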

Best Practices

- One directive per line

- Use the * wildcard to cover a whole URL pattern with one rule

- Use "$" to match the end of a URL

- Add comments with #
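To make the wildcard and "$" behavior concrete, here is a hand-rolled sketch that translates a robots.txt path pattern into a regular expression. The helper names and sample paths are hypothetical, and this is an approximation of the matching rules rather than any search engine's actual implementation.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the end of the URL.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece, then join the pieces with '.*' for every '*'.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def matches(pattern, path):
    """Return True if the URL path is covered by the robots.txt pattern."""
    return pattern_to_regex(pattern).match(path) is not None


# Without '$', a rule is a prefix match.
print(matches("/private", "/private/page"))                 # True

# '*' stands in for any sequence of characters.
print(matches("/*.pdf", "/files/report.pdf?download=1"))    # True

# '$' requires the URL to end right there.
print(matches("/*.pdf$", "/files/report.pdf"))              # True
print(matches("/*.pdf$", "/files/report.pdf?download=1"))   # False
```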

Conclusion

Your robots.txt file is a small but powerful tool. Done right, it improves crawl efficiency, preserves server resources, and keeps bots away from sensitive areas.

Test regularly, and fix any syntax or logic errors to keep your site in good standing.