AI Search Strategies: The Master Guide to Generative Engine Optimization (GEO)

The Shift from SEO to GEO

The era of "ten blue links" is fading. As Large Language Models (LLMs) like ChatGPT, Claude, and Google's Gemini become the primary interface for information discovery, the rules of visibility are being rewritten. We are moving from Search Engine Optimization (SEO) to Generative Engine Optimization (GEO).

To be visible in AI search, you are optimizing for a citation rather than a click. Many AI search engines use Retrieval-Augmented Generation (RAG) to synthesize answers. Content that is hard for a machine to parse is less likely to be retrieved, so your brand may never appear in the output. This guide covers the technical strategies that help your content get retrieved and cited by generative engines.

Understanding RAG: How AI Retrieves Content

To rank in AI, you must understand how Retrieval-Augmented Generation (RAG) works. Traditional search engines crawl and index URLs, then rank them using signals such as keywords and backlinks. A RAG pipeline instead works in three steps:

- Retrieval: The AI searches its vector database or live web index for relevant chunks of text.

- Augmentation: It feeds these chunks into the context window of the LLM.

- Generation: The model synthesizes a natural language answer based on the retrieved data.
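The three steps can be sketched in a few lines of Python. This is a toy under stated assumptions: bag-of-words cosine similarity stands in for the learned embeddings and vector database a production engine would use, and `generate` is a hypothetical stub in place of a real LLM call.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric word tokens only.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Step 1, Retrieval: rank stored chunks by similarity to the query.
    q = Counter(tokenize(query))
    return sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)[:k]


def augment(query: str, retrieved: list[str]) -> str:
    # Step 2, Augmentation: number the chunks and place them in the prompt context.
    context = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(retrieved, start=1))
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"


def generate(prompt: str) -> str:
    # Step 3, Generation: hypothetical stub standing in for a real LLM call.
    return "(model answer citing the numbered sources would appear here)"


chunks = [
    "GEO optimizes content to be cited in AI-generated answers.",
    "Our team enjoys hiking and coffee on Fridays.",
    "RAG retrieves text chunks and feeds them into the LLM context window.",
]
query = "How does RAG feed chunks into an LLM?"
top = retrieve(query, chunks, k=1)
prompt = augment(query, top)
print(prompt)
print(generate(prompt))
```

Only the chunk that shares terms with the query reaches the prompt; the off-topic chunk never enters the context window, which is the practical sense in which unretrieved content is invisible to the answer.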

Key Takeaway: AI engines favor concise, fact-dense content that is easy to parse. Filler and rhetorical padding dilute a chunk's relevance to the query (its "information density"), making your content less likely to be retrieved into the context window.
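No engine publishes an "information density" score, but a toy model shows why padding can hurt. Assuming bag-of-words cosine similarity as a stand-in for a real retriever's relevance scoring, the two chunks below contain the same five query terms; the padded one scores lower because filler words enlarge its vector without adding matches.

```python
import math
import re
from collections import Counter


def tf_vector(text: str) -> Counter:
    # Term-frequency vector over lowercase alphanumeric tokens.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity: shared-term overlap normalized by vector lengths.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


query = tf_vector("GEO citation rate for AI answers")
concise = tf_vector("GEO improves citation rate in AI answers.")
padded = tf_vector(
    "In today's fast-moving digital landscape, it is more important than ever "
    "to think carefully about the ways GEO improves citation rate in AI answers."
)

concise_score = cosine(query, concise)
padded_score = cosine(query, padded)
print(f"concise: {concise_score:.2f}  padded: {padded_score:.2f}")
```

Real engines use dense embeddings rather than word counts, so the exact numbers do not transfer; the sketch only illustrates the dilution effect.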

SEO vs. GEO: Key Differences

The transition to AI search requires a pivot in KPIs and tactics. Below is a comparison of how optimization strategies differ between traditional search engines and generative engines.

| Feature | Traditional SEO | AI Search (GEO) |
|---|---|---|
| Primary Goal | Click-Through Rate (CTR) | Citation & Brand Mention |
| Ranking Signal | Backlinks & Keyword Density | Semantic Relevance & Entity Authority |
| Content Format | Long-form, Skyscraper Content | Structured Data, Direct Answers |
| User Intent | Navigational / Transactional | Informational / Conversational |
| Success Metric | Traffic & Sessions | Share of Voice (SOV) in Output |

Adapting to these metrics is crucial. While backlinks still signal authority, the context of those mentions matters more to an LLM than the mere existence of the link.
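Share of Voice has no single standard definition for AI output. The following is a minimal sketch that assumes you have already collected a sample of AI answers for your target prompts and that defines SOV as each brand's share of all tracked-brand mentions. The brand names and answers are hypothetical, and plain substring matching is a simplification that ignores aliases and misspellings.

```python
def share_of_voice(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's share of all tracked-brand mentions across sampled AI answers.

    Assumption: a brand counts as mentioned at most once per answer, matched by
    case-insensitive substring search.
    """
    mentions = {brand: 0 for brand in brands}
    for answer in answers:
        text = answer.lower()
        for brand in brands:
            if brand.lower() in text:
                mentions[brand] += 1
    total = sum(mentions.values())
    # Avoid division by zero when no tracked brand appears in any answer.
    return {brand: (count / total if total else 0.0) for brand, count in mentions.items()}


# Hypothetical answers sampled from an AI engine for one prompt set.
answers = [
    "Acme and Globex both offer crawl audits.",
    "Acme is a popular choice for schema validation.",
    "Initech focuses on log-file analysis.",
    "No specific vendor is required for this task.",
]
sov = share_of_voice(answers, ["Acme", "Globex", "Initech"])
print(sov)  # {'Acme': 0.5, 'Globex': 0.25, 'Initech': 0.25}
```

Tracking the same prompt set over time matters more than any single reading, since AI answers vary between runs.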

Optimizing for Entities and Knowledge Graphs

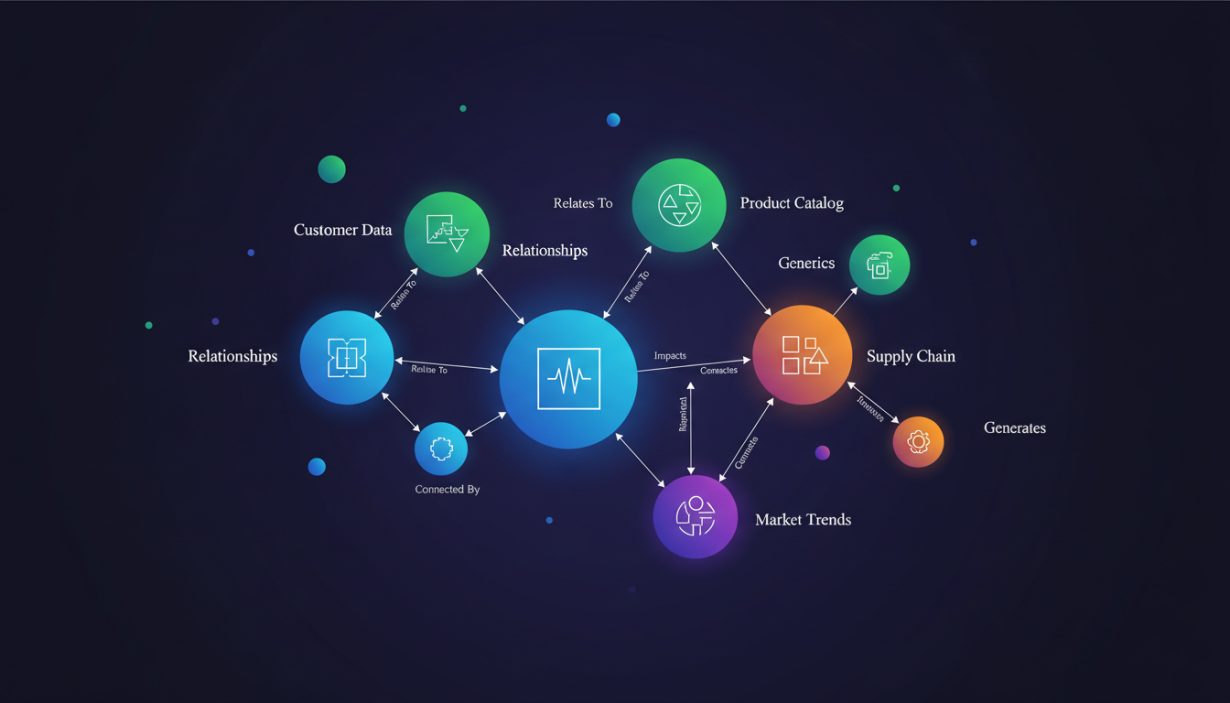

AI search systems often draw on knowledge graphs to resolve entities. To be cited, your brand and content should be recognized as a distinct named entity with clear relationships to your industry's topics.
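One common way to declare an entity and its topical relationships is schema.org Organization markup. The sketch below builds it as JSON-LD using the real schema.org properties sameAs (links to external records of the same entity) and knowsAbout (topics the organization covers). The brand name, URLs, and topics are hypothetical placeholders, not real records.

```python
import json


def organization_jsonld(name: str, url: str, same_as: list[str], topics: list[str]) -> str:
    """Return schema.org Organization markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # sameAs points to external records describing the same entity.
        "sameAs": same_as,
        # knowsAbout links the organization to the topics it covers.
        "knowsAbout": topics,
    }
    return json.dumps(data, indent=2)


markup = organization_jsonld(
    name="Example SEO Tools",  # hypothetical brand
    url="https://www.example.com",
    same_as=[
        "https://www.wikidata.org/wiki/Q_PLACEHOLDER",  # replace with your real Wikidata item
        "https://en.wikipedia.org/wiki/PLACEHOLDER",  # replace with your real article, if any
    ],
    topics=["Technical SEO", "Schema.org markup", "Site crawling"],
)
print(markup)
```

The output would typically be embedded in the page inside a script element of type application/ld+json.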

1. Corroborative Content

LLMs are less likely to hallucinate about facts that are corroborated across multiple high-authority sources. Make sure your published data agrees with the consensus on Wikipedia, Wikidata, and leading industry publications.
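A simple audit is to compare the value you publish for a fact with the values other sources list for it. This sketch assumes you have gathered those values by hand (it calls no external API); the source names and founding years are hypothetical.

```python
from collections import Counter


def consensus_check(your_value: str, source_values: dict[str, str]) -> dict:
    """Compare your published value for one fact against values from other sources."""
    counts = Counter(source_values.values())
    # Majority value across sources; on a tie, most_common keeps first-seen order.
    consensus, votes = counts.most_common(1)[0]
    return {
        "consensus": consensus,
        "agreement": votes / len(source_values),
        "matches_consensus": your_value == consensus,
        "dissenting_sources": sorted(s for s, v in source_values.items() if v != consensus),
    }


# Hypothetical founding year as listed by three sources, gathered manually.
report = consensus_check(
    your_value="2015",
    source_values={"wikipedia": "2014", "wikidata": "2014", "industry_directory": "2015"},
)
print(report)
```

A mismatch does not say which source is right; it flags where a correction (on your site or at the source) is needed so the facts line up.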

2. Semantic Proximity

Keep your content thematically tight. If you are a SaaS provider of technical SEO tools, avoid drifting into unrelated lifestyle topics. LLMs build association vectors between terms and entities, so off-topic content dilutes the link between your brand and its core topics and weakens your entity strength.
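Association vectors inside a model are not directly observable, but you can audit topical drift on your own site with a rough proxy. This sketch assumes bag-of-words cosine similarity between each page and a short description of your core topic; the page slugs, texts, and the 0.1 threshold are hypothetical choices, not an established cutoff.

```python
import math
import re
from collections import Counter


def tf(text: str) -> Counter:
    # Term-frequency vector over lowercase alphanumeric tokens.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def off_topic_pages(core_topic: str, pages: dict[str, str], threshold: float = 0.1) -> list[str]:
    """Return slugs of pages whose similarity to the core topic falls below threshold."""
    core = tf(core_topic)
    return sorted(slug for slug, body in pages.items() if cosine(core, tf(body)) < threshold)


core_topic = "technical SEO tools crawl audit schema validation site speed"
pages = {
    "crawl-audit-guide": "How to run a crawl audit and fix schema validation errors.",
    "site-speed": "Site speed tools for technical SEO teams.",
    "summer-recipes": "Our favourite summer salad recipes and picnic ideas.",
}
print(off_topic_pages(core_topic, pages))  # ['summer-recipes']
```

Embedding-based similarity would catch synonyms that word counts miss; the structure of the audit stays the same.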

Technical Implementation: Schema and Structure

Structured data gives AI systems explicit, machine-readable facts. Google uses Schema.org markup to generate rich snippets, and LLM-based systems can use the same markup to disambiguate facts.

Priority Schema Types for AI:

- FAQPage: Formats Q&A pairs for NLP (Natural Language Processing) extraction.

- Article: Ensure the author and publisher fields are distinct to build E-E-A-T.

- Dataset: If you provide data, wrap it in Dataset schema so code interpreters can ingest it easily.
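As an illustration, the sketch below emits JSON-LD for two of these types. The property names (mainEntity, acceptedAnswer, headline, author, publisher) are standard schema.org vocabulary; the question, headline, and the names "Jane Doe" and "Example Corp" are hypothetical.

```python
import json


def faq_page(pairs: list[tuple[str, str]]) -> dict:
    # Each Q&A pair becomes a Question with an acceptedAnswer.
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {"@type": "Question", "name": q, "acceptedAnswer": {"@type": "Answer", "text": a}}
            for q, a in pairs
        ],
    }


def article(headline: str, author_name: str, publisher_name: str) -> dict:
    # The author (a Person) and the publisher (an Organization) are distinct entities.
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": publisher_name},
    }


def as_script_tag(data: dict) -> str:
    # Wrap the markup in the script element that search engines read JSON-LD from.
    return f'<script type="application/ld+json">\n{json.dumps(data, indent=2)}\n</script>'


faq = faq_page([("What is GEO?", "Optimizing content to be cited in AI-generated answers.")])
post = article("AI Search Strategies", "Jane Doe", "Example Corp")
print(as_script_tag(faq))
print(as_script_tag(post))
```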

Furthermore, use HTML5 semantic tags strictly. <article>, <section>, and <aside> tags help the parser understand the hierarchy and importance of text chunks during the retrieval phase.
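To see why semantic tags matter at retrieval time, consider a hypothetical retriever that treats each section element as one chunk and drops aside content as secondary. This is an assumed chunking policy for illustration, not how any particular engine works. The sketch uses Python's standard html.parser and assumes sections are not nested.

```python
from html.parser import HTMLParser


class SectionChunker(HTMLParser):
    """Hypothetical chunker: one chunk per <section>, skipping <aside> text.

    Assumes sections are not nested.
    """

    def __init__(self):
        super().__init__()
        self.chunks: list[str] = []
        self._in_section = False
        self._aside_depth = 0
        self._parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "section":
            self._in_section = True
            self._parts = []
        elif tag == "aside":
            self._aside_depth += 1

    def handle_endtag(self, tag):
        if tag == "section" and self._in_section:
            # Close the current chunk when its section ends.
            self.chunks.append(" ".join(self._parts))
            self._in_section = False
        elif tag == "aside" and self._aside_depth:
            self._aside_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text inside a section, unless it sits within an <aside>.
        text = data.strip()
        if self._in_section and not self._aside_depth and text:
            self._parts.append(text)


html = """
<article>
  <section><h2>What is GEO?</h2><p>GEO optimizes content for AI citations.</p></section>
  <section><h2>How RAG works</h2><p>Chunks are retrieved.</p>
    <aside>Related: our newsletter.</aside></section>
</article>
"""
chunker = SectionChunker()
chunker.feed(html)
chunker.close()
print(chunker.chunks)
```

With clear section boundaries, each chunk carries one self-contained idea, and the promotional aside never competes for a place in the context window.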